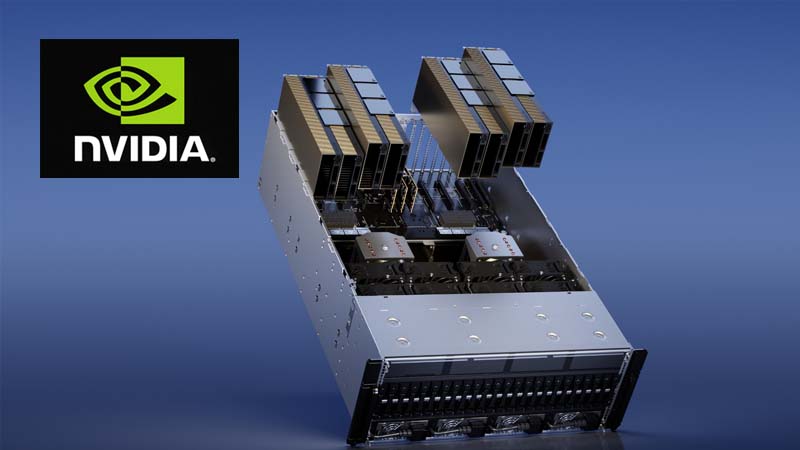

The H100, a graphics processing unit (GPU) that Nvidia Corp. announced in 2022 and that was widely deployed during 2023, has become a transformative force in business and technology. Demand for the chip has added more than $1 trillion to Nvidia’s market value and made the company a pivotal player in the AI revolution. That demand is so strong that some customers have waited up to six months for delivery.

The H100 is built on Nvidia’s Hopper architecture, named in homage to computing pioneer Grace Hopper, and represents a significant leap in GPU technology. GPUs traditionally served the gaming community, but the H100 breaks the mold: it is built to process vast volumes of data at very high speed, which makes it well suited to the rigorous demands of AI model training. Nvidia’s work since the early 2000s to adapt its GPUs for broader applications paved the way for this success.

What sets the H100 apart is its ability to speed up the training of large language models (LLMs). These models, which learn to perform complex tasks such as text translation and image synthesis, require immense computational power. According to Nvidia, the H100 is four times faster than its predecessor, the A100, at training LLMs and 30 times faster at responding to user prompts. Such performance is crucial for companies racing to develop new AI capabilities.
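To make those multipliers concrete, the short sketch below converts them into wall-clock time. The speedup factors are the ones quoted above; the A100 baseline figures (a 40-day training run and a 3-second response) are hypothetical values chosen purely for illustration and are not measurements from Nvidia or anyone else.

```python
def scaled_time(baseline: float, speedup: float) -> float:
    """Return how long a job takes after applying a speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline / speedup


# Speedup factors quoted in the article (H100 relative to A100).
TRAINING_SPEEDUP = 4.0
INFERENCE_SPEEDUP = 30.0

# Hypothetical A100 baselines, assumed only for this illustration.
a100_training_days = 40.0
a100_response_seconds = 3.0

h100_training_days = scaled_time(a100_training_days, TRAINING_SPEEDUP)
h100_response_seconds = scaled_time(a100_response_seconds, INFERENCE_SPEEDUP)

print(f"Training: {a100_training_days:.0f} days -> {h100_training_days:.0f} days")
print(f"Response: {a100_response_seconds:.1f} s -> {h100_response_seconds:.1f} s")
```

Under these assumed baselines, a training run that would tie up hardware for more than a month finishes in ten days, and a multi-second response drops to a fraction of a second. Real-world gains depend on the model, the software stack, and the cluster configuration.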

Nvidia’s journey to AI leadership began in Santa Clara, California, where it pioneered the graphics chip industry. The company’s GPUs, known for rendering detailed images on screens, were repurposed in the early 2000s for broader computational tasks. This innovation was a game-changer for AI research, making practical applications possible.

Despite efforts to compete by other tech giants and chipmakers, including Amazon’s AWS, Google Cloud, Microsoft’s Azure, Advanced Micro Devices Inc. (AMD), and Intel Corp., Nvidia holds about 80% of the market for AI data center accelerators. Its success lies in its ability to update both its hardware and the supporting software faster than competitors can. Nvidia has also developed cluster systems that let customers deploy H100s quickly and in bulk. Its data center division reported an 81% revenue increase to $22 billion in the last quarter of 2023, highlighting its dominance.

AMD and Intel, while significant players in the chip market, still trail Nvidia in the AI sector. AMD’s Instinct line, including the MI300X, targets Nvidia’s market share and offers more memory for AI workloads. However, Nvidia’s edge goes beyond hardware performance. The company created CUDA, a parallel computing platform and programming model for its GPUs, and much AI software is built on it, which makes switching to rival chips harder.

Looking ahead, Nvidia plans to release the H200, followed by a more radically designed B100. CEO Jensen Huang emphasizes the importance of early adoption of these technologies by both governments and private enterprises to stay competitive in the AI race. Nvidia’s strategy is clear: by establishing its technology as the foundation for AI projects, it not only leads the current market but also secures its future through customer loyalty and ongoing upgrades.

In conclusion, the Nvidia H100 is not just a chip; it’s a catalyst for a new era in artificial intelligence and computing, reshaping industries and setting new standards for technological innovation.